Using Android To Help Stroke Patients

As you may already know, my wife's aunt had a stroke in July 2015, and this has had a major impact on our family. I won't go into too much detail, but suffice it to say that our aunt has lost most of her ability to speak and to move the right side of her body.

Over the months she has shown great improvement and is slowly regaining her strength. But there are times when she is greatly frustrated by her speech, and these are generally the times when we find it hardest to understand her needs. She is comfortable and well cared for, but sometimes she needs the curtains closed, or a pillow for her back, and so on. At these times it is hard for her to communicate with us.

I had a thought before Christmas...

Could I re-purpose an old Android tablet to be a communication tool and enable her to communicate when frustrated?

So I dug out my old Archos 80 tablet. It's a big tablet with a 4:3 screen but it will do the job.

The next challenge that I faced was...

I don't know how to code an Android app...but I do know how to use MIT App Inventor 2. Could I use that?

Well, yes I can. I followed the beginner tutorials on the App Inventor website and learnt how to create a button that triggers audio playback, which is basically a soundboard.

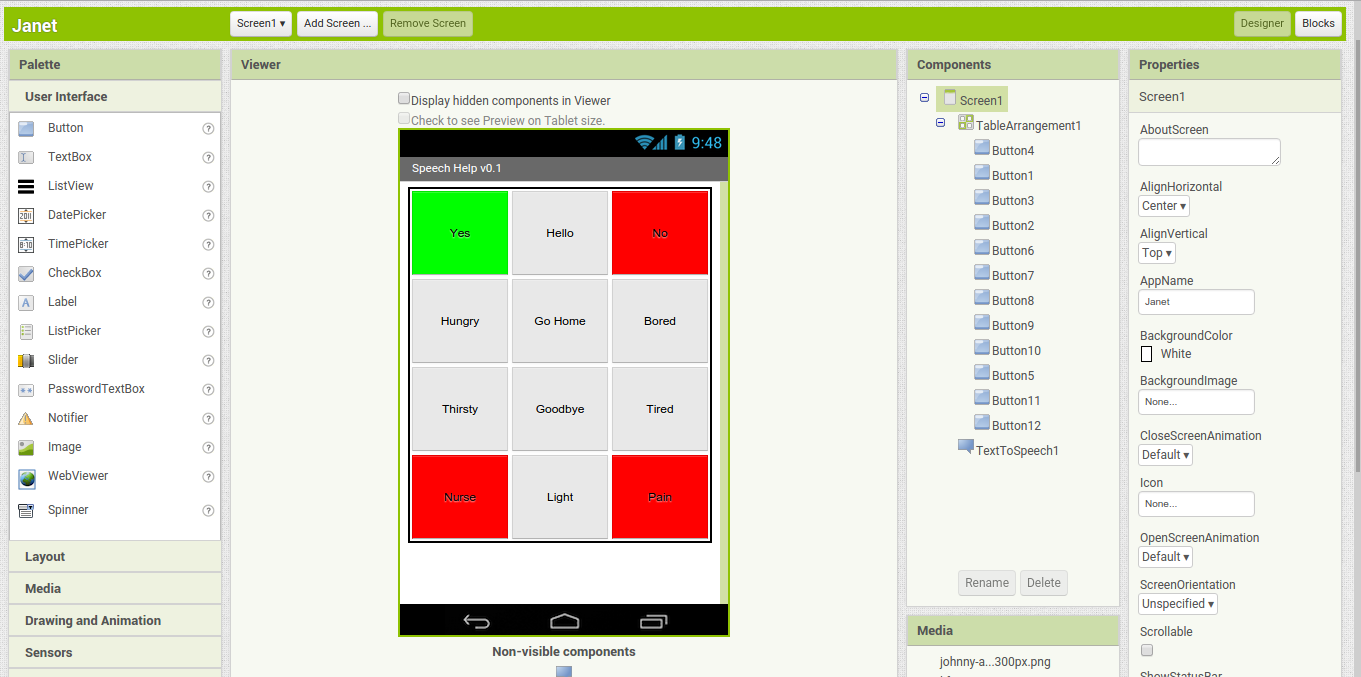

In the Designer view I made up a grid of buttons, using a table arrangement to contain them. I colour-coded the buttons, with green for yes and red for no. There are also two other red buttons used to indicate an urgent request.
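For readers who think more easily in text than in a drag-and-drop designer, the button grid can be pictured as a small table of data. This is only a sketch in Python, not what App Inventor generates: the `SpeechButton` class, the `to_grid` helper, the phrases, and the grey colour for everyday requests are all assumptions made up for illustration. Only the green "yes", the red "no" and the two red urgent buttons come from the app described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SpeechButton:
    """One button on the board (hypothetical structure)."""
    label: str    # text shown on the button
    colour: str   # background colour used for colour coding
    phrase: str   # sentence to be spoken when the button is pressed


# Hypothetical labels and phrases; the real app's wording may differ.
BUTTONS = [
    SpeechButton("Yes", "green", "Yes"),
    SpeechButton("No", "red", "No"),
    SpeechButton("Help", "red", "I need help now"),        # urgent request
    SpeechButton("Pain", "red", "I am in pain"),           # urgent request
    SpeechButton("Curtains", "grey", "Please close the curtains"),
    SpeechButton("Pillow", "grey", "Please bring a pillow for my back"),
]


def to_grid(buttons, columns):
    """Split a flat list of buttons into rows of `columns` buttons each."""
    return [buttons[i:i + columns] for i in range(0, len(buttons), columns)]


grid = to_grid(BUTTONS, columns=2)
for row in grid:
    print(" | ".join(f"{b.label} ({b.colour})" for b in row))
```

Keeping the labels, colours and phrases together in one list makes it easy to see at a glance which buttons are urgent and to change the wording later.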

I then added text-to-speech functionality so that each button speaks its message aloud. In App Inventor this is a "non-visible component", in other words a component that works behind the scenes.

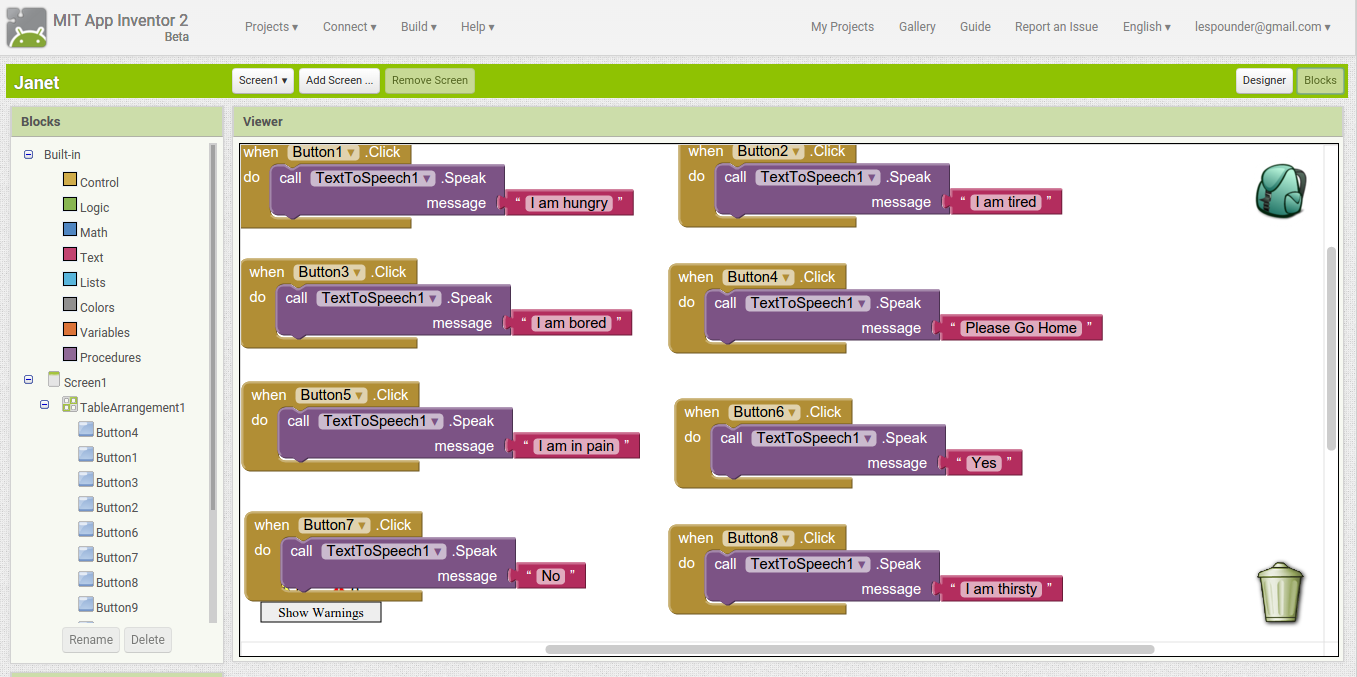

To write code for the buttons we need to switch to the Blocks mode, the button for which is in the top right of the screen.

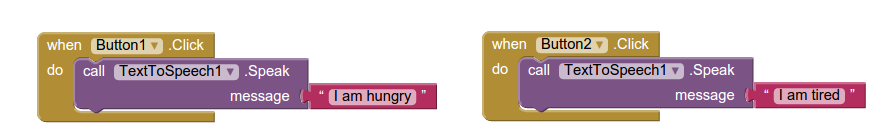

In the Blocks mode we can see a Scratch-like interface. It is a little more complex than Scratch, but it is still relatively easy for a user to build an app. Using the click event of a button as the trigger, we call the text-to-speech function, which speaks a string of text (the message).
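The same "when the button is clicked, speak this message" idea can be written out in text form. The following is a minimal Python sketch, not App Inventor code: `FakeTextToSpeech` and `Button` are hypothetical stand-ins invented here, and the fake speech engine only records and prints the message instead of producing audio.

```python
class FakeTextToSpeech:
    """Stand-in for a speech engine: records and prints what it is asked to say."""

    def __init__(self):
        self.spoken = []

    def speak(self, message):
        self.spoken.append(message)
        print(f"[speaking] {message}")


class Button:
    """Very small model of a button with a single click handler."""

    def __init__(self, label):
        self.label = label
        self._on_click = None

    def when_clicked(self, handler):
        # Register the function to run when the button is pressed.
        self._on_click = handler

    def click(self):
        # Simulate a press; do nothing if no handler has been registered.
        if self._on_click is not None:
            self._on_click()


tts = FakeTextToSpeech()
pillow_button = Button("Pillow")

# The event (a click) triggers the action (speaking a fixed message).
pillow_button.when_clicked(lambda: tts.speak("Please bring a pillow for my back"))
pillow_button.click()
```

The point of the sketch is the separation between the event and the action: the button knows nothing about speech, it just runs whatever handler it was given.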

I duplicated the original sequence of blocks for each of the buttons. I then downloaded an APK (an Android installer package) and installed it on my OnePlus X running Android 5 and on my Archos 80 running Android 4.0. The app worked well on both. Here's a video of it running on the Archos tablet.
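Duplicating the blocks works well for a handful of buttons. In a text-based language the same repetition is often replaced by a table of phrases and a loop. Here is a hedged Python sketch of that alternative: the `PHRASES` table and the `make_handler` helper are hypothetical, and `spoken.append` stands in for a real speech call.

```python
# Hypothetical button labels mapped to the sentence each one should speak.
PHRASES = {
    "Yes": "Yes",
    "No": "No",
    "Curtains": "Please close the curtains",
    "Pillow": "Please bring a pillow for my back",
}


def make_handler(speak, phrase):
    """Build a click handler that speaks one fixed phrase.

    A factory function is used so that each handler keeps its own phrase,
    rather than every handler sharing the loop's last value.
    """
    def handler():
        speak(phrase)
    return handler


spoken = []  # stand-in for audio output: collects the phrases "spoken"

# One handler per button, built from the table instead of copied by hand.
handlers = {
    label: make_handler(spoken.append, phrase)
    for label, phrase in PHRASES.items()
}

handlers["Curtains"]()
handlers["Yes"]()
print(spoken)
```

With this layout, adding a new button means adding one line to the table rather than copying a whole sequence.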

So what's next?

Part of the rehabilitation process is for the patient to learn vowels using certain words. I'd like to expand the application so that this is available to them, with the buttons on another screen so that we can keep the app easy to use.

Another possible expansion is a game like snap, where the patient must match items and then say their name, for example "Keys". This would exercise both their hand-eye co-ordination and their speech.
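The matching logic for such a game could be quite small. The sketch below is one possible shape for it in Python, written under assumptions: the item list, the `deal_pair` and `check_snap` functions, and the feedback wording are all hypothetical, and the step of actually listening to the patient say the word is left out.

```python
import random

# Hypothetical everyday items that would be shown as pictures.
ITEMS = ["Keys", "Cup", "Spoon", "Glasses"]


def deal_pair(rng, items, match_chance=0.5):
    """Pick two items to show; they match with probability `match_chance`."""
    left = rng.choice(items)
    if rng.random() < match_chance:
        right = left
    else:
        right = rng.choice([item for item in items if item != left])
    return left, right


def check_snap(left, right, pressed_snap):
    """Return (correct, feedback) for the patient's response to one pair."""
    is_match = left == right
    if pressed_snap and is_match:
        # A correct snap leads on to the speech exercise.
        return True, f"Well done! Now say the word: {left}"
    if not pressed_snap and not is_match:
        return True, "Correct, they are different."
    return False, "Not quite, have another look."


rng = random.Random()
left, right = deal_pair(rng, ITEMS)
print(left, right, check_snap(left, right, pressed_snap=(left == right)))
```

Raising or lowering `match_chance` would be one simple way to make the game easier or harder for the patient.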

It was an interesting experience to build the app, applying skills learnt from other languages and projects to something that will have a real use.